Description

Introduction to ETL and ELT with Snowflake

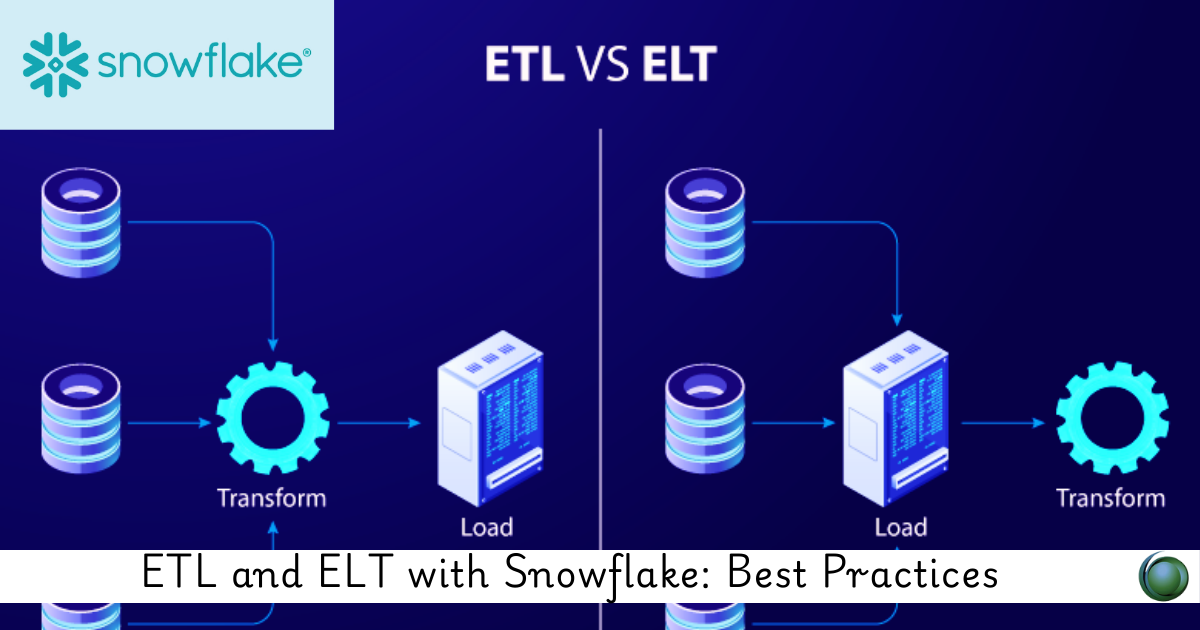

Snowflake is a cloud data platform that supports both ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) approaches to data integration. Traditional ETL transforms data before loading it into the data warehouse, while ELT loads the raw data first and then uses Snowflake’s scalable compute to perform the transformations. Understanding the best practices for ETL and ELT with Snowflake is essential for optimizing data pipelines, improving performance, and reducing costs.
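The difference in ordering between the two approaches can be sketched in a few lines. The example below is only an illustration under stated assumptions: it uses Python's built-in `sqlite3` module as a stand-in for a warehouse (it does not connect to Snowflake), and the sample records and the table names `orders_etl`, `orders_raw`, and `orders_elt` are hypothetical.

```python
import sqlite3

# Hypothetical raw records, standing in for data extracted from a source system.
RAW_ORDERS = [
    ("1001", " alice ", "19.99"),
    ("1002", "BOB", "5.00"),
    ("1003", "Carol", "12.50"),
]


def run_etl(conn):
    """ETL: transform in application code, then load only the cleaned rows."""
    conn.execute(
        "CREATE TABLE orders_etl (order_id INTEGER, customer TEXT, amount REAL)"
    )
    cleaned = [
        (int(order_id), customer.strip().lower(), float(amount))
        for order_id, customer, amount in RAW_ORDERS
    ]
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?, ?)", cleaned)


def run_elt(conn):
    """ELT: load the raw rows as-is, then transform with SQL in the warehouse."""
    conn.execute(
        "CREATE TABLE orders_raw (order_id TEXT, customer TEXT, amount TEXT)"
    )
    conn.executemany("INSERT INTO orders_raw VALUES (?, ?, ?)", RAW_ORDERS)
    # The transformation runs where the data already lives.
    conn.execute(
        """
        CREATE TABLE orders_elt AS
        SELECT CAST(order_id AS INTEGER) AS order_id,
               LOWER(TRIM(customer))     AS customer,
               CAST(amount AS REAL)      AS amount
        FROM orders_raw
        """
    )


def fetch(conn, table):
    """Return all rows of a table, ordered by order_id."""
    query = f"SELECT order_id, customer, amount FROM {table} ORDER BY order_id"
    return conn.execute(query).fetchall()


# sqlite3 in-memory database used purely as a local stand-in (assumption).
conn = sqlite3.connect(":memory:")
run_etl(conn)
run_elt(conn)
etl_rows = fetch(conn, "orders_etl")
elt_rows = fetch(conn, "orders_elt")
raw_count = conn.execute("SELECT COUNT(*) FROM orders_raw").fetchone()[0]
```

Both paths end with the same cleaned table. The ELT path also keeps the untransformed `orders_raw` table in the warehouse, so the transformation can be revised and re-run without extracting the data again.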

Prerequisites

- Basic knowledge of databases and SQL.

- Familiarity with data warehousing concepts.

- Understanding of ETL and ELT workflows.

Table of Contents

1. ETL vs. ELT: Understanding the Differences

1.1 Definition and Key Differences

1.2 When to Use ETL vs. ELT in Snowflake

1.3 Advantages of ELT in a Cloud Data Warehouse

2. Data Extraction Best Practices

2.1 Extracting Data from Various Sources (Databases, APIs, Files)

2.2 Using Snowflake Connectors for Efficient Data Ingestion

2.3 Handling High-Volume Data Extraction Efficiently

3. Loading Data into Snowflake (ELT Approach)

3.1 Snowflake’s Data Loading Methods (Bulk Loading with the COPY Command, Continuous Loading with Snowpipe)

3.2 Best Practices for Using External Stages (AWS S3, Azure Blob, GCS)

3.3 Optimizing Data Load Performance

4. Data Transformation Strategies in Snowflake

4.1 Using SQL-Based Transformations in Snowflake (Ref: Snowflake Architecture and Performance Optimization)

4.2 Leveraging Snowflake Streams and Tasks for Incremental Updates

4.3 Materialized Views and Clustering for Performance Optimization

5. Performance Optimization for ETL and ELT Workflows

5.1 Best Practices for Query Performance in Transformations

5.2 Optimizing Virtual Warehouses for ETL Workloads

5.3 Using Caching to Improve ELT Efficiency

6. Automating Data Pipelines in Snowflake

6.1 Using Snowflake Tasks for Scheduled Data Transformations

6.2 Implementing Event-Driven Pipelines with Snowpipe

6.3 Orchestrating ETL and ELT Workflows with Apache Airflow and dbt

7. Handling Data Quality and Governance

7.1 Implementing Data Validation and Error Handling

7.2 Ensuring Data Consistency and Accuracy

7.3 Managing Schema Evolution and Versioning

8. Security and Compliance Considerations

8.1 Secure Data Ingestion and Transformation

8.2 Role-Based Access Control (RBAC) for Data Pipelines

8.3 Auditing and Monitoring ETL/ELT Workflows

9. Real-World Use Cases and Best Practices

9.1 Migrating from Traditional ETL to ELT in Snowflake

9.2 Optimizing ELT for Large-Scale Data Processing

9.3 Case Study: Implementing a Serverless ELT Pipeline

10. Conclusion and Next Steps

10.1 Key Takeaways for Efficient ETL and ELT in Snowflake

10.2 Future Trends in Cloud-Based Data Integration

10.3 Next Steps for Mastering Snowflake Data Pipelines

Snowflake’s cloud-native capabilities enable efficient ETL and ELT workflows through scalable compute resources, automated ingestion mechanisms, and SQL-based transformations. By following best practices in data extraction, loading, transformation, and optimization, organizations can streamline data pipelines for improved performance, cost efficiency, and data governance. A well-implemented ETL/ELT strategy in Snowflake supports reliable data integration, faster insights, and better decision-making.
