Introduction to DataOps Fundamentals

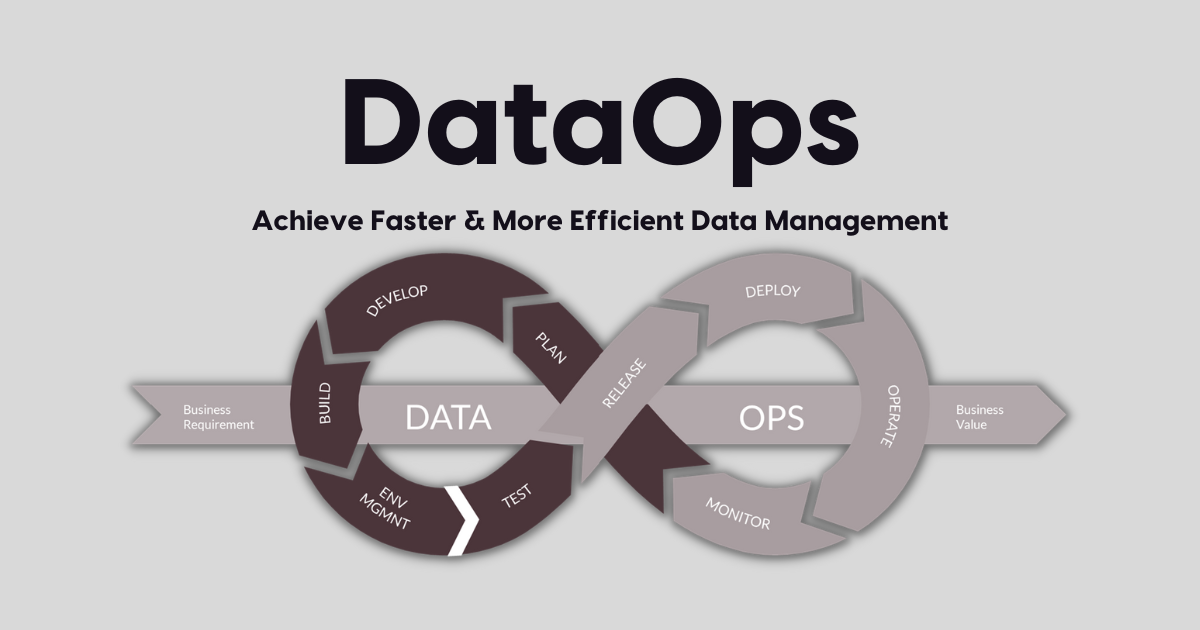

DataOps, or Data Operations, is a set of practices and tools designed to improve the quality and speed of data engineering and operations. It combines agile methodologies, DevOps practices, and advanced data management techniques to create a streamlined approach to data delivery, processing, and analytics. This course introduces the fundamentals of DataOps, focusing on the integration of data pipelines, automation, and real-time collaboration to ensure that high-quality data is delivered consistently and efficiently.

Prerequisites

Participants should have:

- A basic understanding of data engineering and data pipelines.

- Familiarity with databases, ETL (Extract, Transform, Load) processes, and data warehousing concepts.

- Experience with data processing and analytics tools (e.g., SQL, Python, or cloud platforms). This is beneficial but not mandatory.

- Familiarity with software development principles, especially agile methodologies and version control. This is helpful but not required.

Table of Contents

- Introduction to DataOps

1.1 What is DataOps?

1.2 Key Principles of DataOps

1.3 The Importance of DataOps in Modern Data Engineering

- Core Components of DataOps

2.1 Data Pipelines and Automation

2.2 Collaboration Across Teams

2.3 Monitoring and Quality Assurance

- Building Efficient Data Pipelines

3.1 Designing Scalable Data Pipelines

3.2 Integrating Real-Time Data Processing

3.3 Automation for Continuous Data Delivery

- DataOps Tools and Technologies

4.1 Overview of DataOps Tools (Airflow, Jenkins, etc.)

4.2 Version Control in DataOps (Ref: Implementing DataOps: Best Practices for Agile Data Management)

4.3 Using Containers and Kubernetes for Data Pipelines

- Data Quality and Testing in DataOps

5.1 Ensuring Data Accuracy and Integrity

5.2 Automated Data Validation and Testing

5.3 Error Handling and Troubleshooting

- Collaboration and Communication in DataOps

6.1 Enhancing Collaboration Between Data and DevOps Teams

6.2 Implementing Agile Methodologies in Data Operations

6.3 Best Practices for Cross-Team Communication

- Monitoring and Performance in DataOps

7.1 Real-Time Monitoring of Data Pipelines

7.2 Key Metrics and KPIs for DataOps

7.3 Optimizing Pipeline Performance

- Data Security and Governance in DataOps

8.1 Ensuring Data Privacy and Compliance

8.2 Securing Data Pipelines and Infrastructure

8.3 Governance in a DataOps Environment

- Case Studies and Industry Applications

9.1 Successful DataOps Implementations

9.2 DataOps in Different Industries (Finance, Healthcare, etc.)

9.3 Lessons Learned and Challenges Faced

- Future Trends in DataOps

10.1 The Role of AI and Machine Learning in DataOps

10.2 The Growing Need for Real-Time Data Processing

10.3 Innovations in Automation and Data Management

Conclusion

DataOps is transforming the way organizations build and operate their data pipelines. By adopting DataOps principles, companies can achieve faster, more reliable, and higher-quality data processing. This course gives participants the tools and knowledge they need to implement DataOps in their organizations, streamline workflows, and improve collaboration between teams. Applied consistently, these principles and practices can help organizations stay competitive in a fast-evolving data landscape.