Description

Introduction

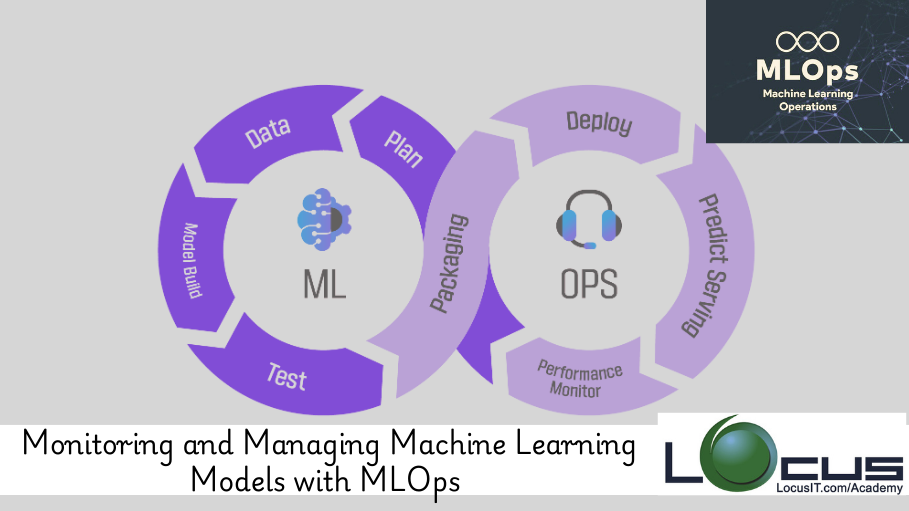

Once machine learning models are deployed in production, you need to monitor their performance continuously, detect anomalies, and manage their lifecycle. This course covers best practices for monitoring and managing machine learning models, with an emphasis on MLOps principles. You’ll learn how to track model performance, detect model drift, automate retraining, and manage model versions so that your machine learning systems stay reliable over time.

Prerequisites

- Basic understanding of machine learning and model deployment.

- Familiarity with MLOps concepts and tools (CI/CD, Docker, Kubernetes).

- Experience in Python and ML frameworks such as TensorFlow, PyTorch, or Scikit-learn.

- Knowledge of cloud platforms like AWS, Azure, or Google Cloud is beneficial.

Table of Contents

- Introduction to MLOps for Model Monitoring

1.1 Importance of Monitoring and Managing ML Models

1.2 Key Challenges in Model Management

1.3 Overview of MLOps for Model Performance and Monitoring

- Setting Up Model Monitoring Infrastructure

2.1 Choosing the Right Tools for Monitoring (Prometheus, Grafana, etc.)

2.2 Integrating Model Monitoring with CI/CD Pipelines

2.3 Cloud-based Monitoring Solutions (AWS CloudWatch, Azure Monitor)

2.4 Setting Up Dashboards for Real-time Model Performance

- Tracking Model Performance Metrics

3.1 Defining Key Performance Indicators (KPIs) for ML Models

3.2 Monitoring Accuracy, Precision, Recall, and Other Metrics

3.3 Evaluating Model Latency and Throughput in Production

3.4 Setting Thresholds and Alerts for Model Performance

- Detecting Model Drift and Anomalies

4.1 What is Model Drift and Why Does It Occur?

4.2 Detecting Concept Drift and Data Drift (Ref: Versioning and Model Deployment: Key Strategies in MLOps)

4.3 Methods for Monitoring Feature Distribution Shifts

4.4 Implementing Automated Drift Detection and Alert Systems

- Automating Model Retraining and Versioning

5.1 Building Retraining Pipelines in MLOps

5.2 Setting Triggers for Retraining (Drift, Performance Degradation, etc.)

5.3 Managing Model Versions and Deployments with Model Registries

5.4 Automating Model Rollbacks and Version Management

- Managing Model Lifecycle with MLOps

6.1 End-to-End Model Lifecycle Management

6.2 Model Validation and Approval in the Deployment Pipeline

6.3 Continuous Integration and Continuous Delivery (CI/CD) for Model Updates

6.4 Using Model Registries for Storing and Managing Different Versions

- Ensuring Model Interpretability and Transparency

7.1 Techniques for Model Explainability (LIME, SHAP, etc.)

7.2 Monitoring Model Interpretability in Production

7.3 Auditing Models for Bias and Fairness in Real-time

7.4 Transparency in Model Predictions for Business Stakeholders

- Scaling Monitoring and Management with Cloud and Kubernetes

8.1 Using Kubernetes for Scaling Model Monitoring and Management

8.2 Leveraging Cloud-native Tools for Large-scale Model Deployment

8.3 Autoscaling Models and Monitoring Services with Kubernetes

8.4 Cloud-based Model Monitoring Pipelines for Continuous Operations

- Security and Privacy in Model Monitoring

9.1 Ensuring Data Privacy in Model Monitoring (GDPR Compliance, etc.)

9.2 Securing Model APIs and Predictions

9.3 Role-based Access Control (RBAC) for Monitoring and Model Management

9.4 Securing Data Pipelines and Ensuring Model Integrity

- Real-world Case Studies and Applications

10.1 Monitoring Financial Fraud Detection Models

10.2 Managing Sentiment Analysis Models for Real-time Customer Insights

10.3 Implementing Automated Retraining for Predictive Maintenance Models

10.4 Building an MLOps Pipeline for Healthcare Diagnostics

- Hands-on Labs and Capstone Project

11.1 Setting Up Model Monitoring with Prometheus and Grafana

11.2 Implementing Drift Detection and Retraining Triggers in a Model Pipeline

11.3 Using MLflow for Model Versioning and Deployment Management

11.4 Capstone Project: Design a Full MLOps Pipeline for Model Monitoring, Drift Detection, and Retraining

Conclusion

By mastering the monitoring and management of machine learning models, you’ll ensure their continued performance and relevance in production. This course provides the tools and techniques needed to detect issues like model drift, automate retraining, and manage versions, enabling more efficient, transparent, and secure machine learning operations. With a strong emphasis on MLOps principles, you’ll be equipped to manage ML models at scale, ensuring they deliver consistent value over time.
