Description

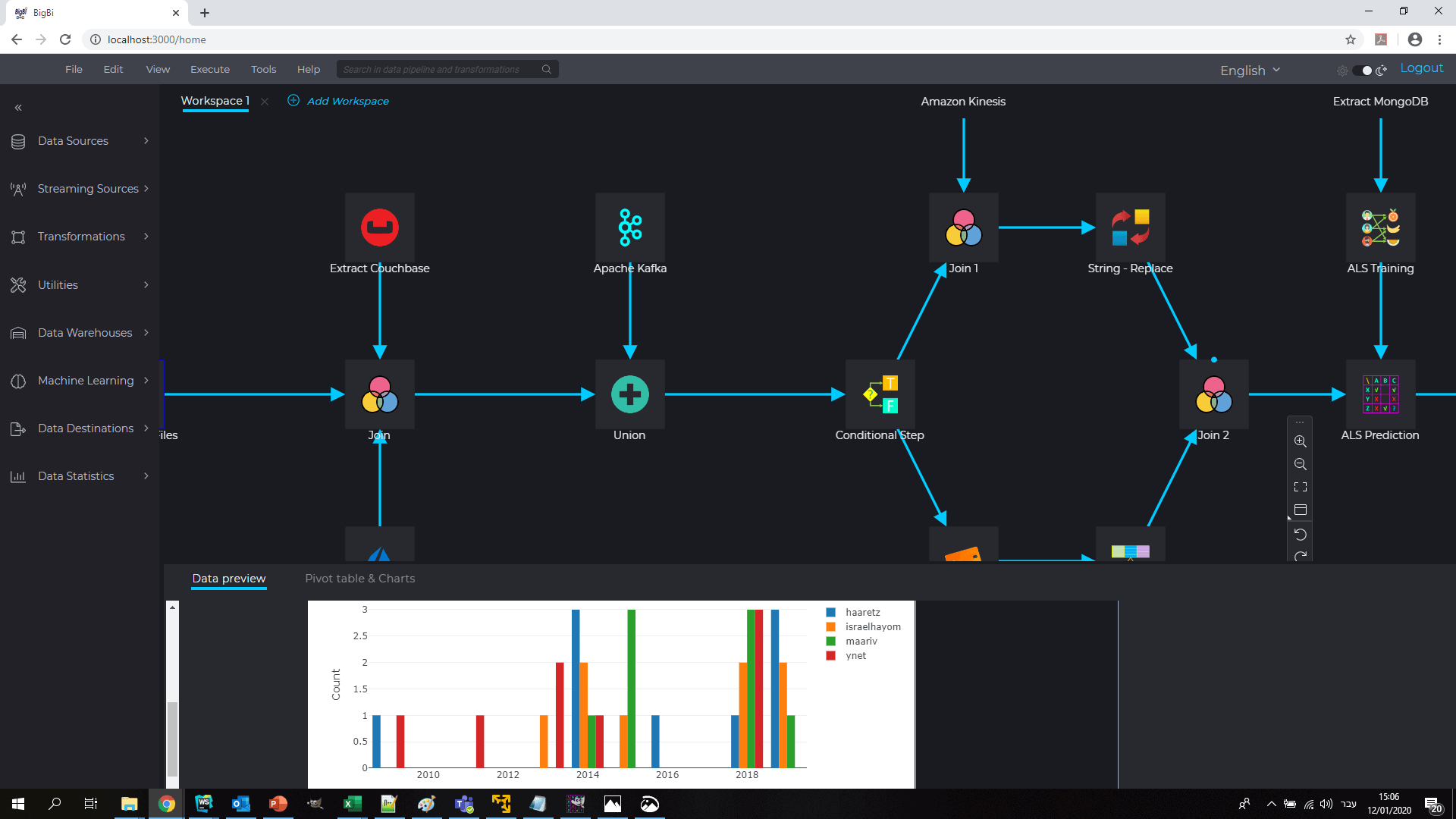

Introduction of Data Transformation with Apache Spark & Java:

Data transformation and Extract, Transform, Load (ETL) processes are fundamental for integrating and preparing data for analysis in modern data ecosystems. This course is designed to provide a deep understanding of how to perform data transformation and ETL tasks using Apache Spark with Java. Apache Spark’s powerful distributed processing capabilities make it an ideal framework for handling large-scale data transformations and ETL operations efficiently.

Participants will learn how to build robust ETL pipelines, perform complex data transformations, and integrate Spark with various data sources and sinks. The course emphasizes practical skills and includes hands-on exercises to help participants gain experience in developing and optimizing data transformation workflows with Spark and Java.

Prerequisites:

- Proficiency in Java programming

- Basic understanding of Apache Spark (core concepts such as RDDs, DataFrames, and Datasets)

- Familiarity with data processing concepts and ETL principles

- Experience with SQL and data manipulation (optional, but beneficial)

- Basic knowledge of distributed computing (optional, but recommended)

Table of Contents:

1: Introduction to Data Transformation and ETL

1.1 Overview of data transformation and ETL processes

1.2 Importance of ETL in data integration and analytics

1.3 Introduction to Apache Spark and its role in ETL

1.4 Key components of Spark: Core, SQL, Streaming(Ref: SnowPro® Core Certification)

2: Setting Up Apache Spark for ETL Development

2.1 Installing and configuring Apache Spark for ETL tasks

2.2 Setting up a Java development environment for Spark

2.3 Overview of Spark dependencies and project structure

2.4 Running Spark applications for ETL operations

3: Data Ingestion and Integration

3.1 Loading data from various sources (HDFS, S3, JDBC, etc.)

3.2 Reading and writing different data formats (CSV, JSON, Avro, Parquet)

3.3 Integrating Spark with external databases and data warehouses

3.4 Handling schema evolution and data format conversions

4: Data Transformation with Spark

4.1 Understanding Spark’s data transformation capabilities

4.2 Performing data cleaning and preprocessing tasks

4.3 Applying transformations: Filtering, aggregating, and joining data

4.4 Handling missing values and outliers

5: Building ETL Pipelines with Apache Spark

5.1 Designing and implementing end-to-end ETL pipelines

5.2 Developing batch and streaming ETL processes with Spark

5.3 Using Spark SQL for complex data transformations

5.4 Building reusable ETL components and modular pipelines

6: Optimizing ETL Processes

6.1 Performance optimization strategies for Spark ETL tasks

6.2 Managing data partitioning, caching, and shuffling

6.3 Tuning Spark configurations for better performance

6.4 Monitoring and troubleshooting ETL jobs

7: Advanced ETL Techniques

7.1 Working with complex and nested data structures

7.2 Implementing custom transformations with User-Defined Functions (UDFs)

7.3 Handling large-scale data transformations and optimizations

7.4 Integrating Spark ETL processes with other big data tools

8: Case Studies and Hands-On Projects

8.1 Real-world case studies of data transformation and ETL projects

8.2 Hands-on project: Building a complete ETL pipeline with Spark and Java

8.3 Analyzing and optimizing a sample ETL project

8.4 Addressing common challenges and solutions in ETL development

9: Deployment and Production Readiness

9.1 Deploying ETL pipelines on various cluster managers (YARN, Mesos, Kubernetes)

9.2 Managing and monitoring ETL jobs in production environments

9.3 Ensuring data quality, security, and compliance

9.4 Strategies for scaling and maintaining ETL pipelines

10: Future Trends and Further Learning

10.1 Emerging trends in ETL and data transformation technologies

10.2 Resources for continued learning and professional development

10.3 Exploring advanced topics: Real-time ETL with Spark Streaming

Conclusion and Summary

- Recap of key concepts and techniques covered in the course

- Practical takeaways and applications for data transformation and ETL

- Next steps for advancing skills and exploring further opportunities

Reviews

There are no reviews yet.