Description

Introduction

DataOps Automation is the practice of using automated processes and tools to streamline and optimize the flow of data across an organization’s pipelines and workflows. The goal is to enhance efficiency, reduce errors, and ensure that data is processed quickly and reliably. This approach integrates automation, DevOps practices, and agile methodologies to continuously deliver high-quality data to support analytics, machine learning, and decision-making. In this course, we will explore best practices for automating data operations, optimizing workflows, and implementing a robust DataOps strategy for enhanced data delivery and business outcomes.

Prerequisites

Participants should have:

- Basic understanding of data management and data pipelines.

- Familiarity with ETL (Extract, Transform, Load) processes and cloud platforms (AWS, Azure, GCP).

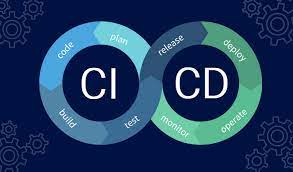

- Knowledge of DevOps practices, such as version control, CI/CD pipelines, and containerization.

- An understanding of data quality and governance principles (beneficial, but not required).

Table of Contents

- Introduction to DataOps Automation

1.1 What is DataOps Automation?

1.2 Importance of Automation in Data Pipelines

1.3 Key Benefits of Automating Data Operations

- Designing Automated Data Pipelines

2.1 Best Practices for Data Pipeline Design

2.2 Building Efficient, Scalable Pipelines

2.3 Automating Data Ingestion, Transformation, and Loading

- Tools for DataOps Automation

3.1 Popular Tools for Automating Data Pipelines (Apache Airflow, Jenkins, etc.)

3.2 Leveraging Cloud Services for DataOps Automation (AWS Data Pipeline, Azure Data Factory)

3.3 Integration with Data Processing Tools (Spark, Kafka, etc.)

- Continuous Integration and Continuous Deployment (CI/CD) for Data

4.1 Automating Testing and Deployment for Data Pipelines

4.2 Version Control for Data (Git for Data Pipelines)

4.3 Automating Rollbacks and Managing Errors in Data Pipelines

- Automating Data Quality Assurance

5.1 Implementing Automated Data Validation

5.2 Data Cleaning and Error Detection Automation

5.3 Building Automated Monitoring for Data Quality

- Orchestrating Data Workflows

6.1 Designing and Automating Complex Data Workflows

6.2 Workflow Automation with Data Processing Tools

6.3 Managing Dependencies and Scheduling Data Tasks

- Monitoring and Managing Automated Data Pipelines

7.1 Real-Time Monitoring of Data Pipelines

7.2 Automation of Alerts and Notifications for Data Failures

7.3 Performance Metrics and Optimization for Data Pipelines

- Security and Compliance in Automated Data Operations

8.1 Ensuring Data Security in Automated Pipelines

8.2 Managing Compliance in Data Pipelines (GDPR, HIPAA)

8.3 Best Practices for Securing Automated Data Flows

- Scaling DataOps Automation for Enterprise Environments

9.1 Strategies for Scaling Data Pipelines (Ref: DataOps for Cloud Environments: Scaling and Securing Data Operations)

9.2 Automating Large-Scale Data Processing

9.3 Managing Data Growth with Automation

- Future of DataOps Automation

10.1 Innovations in DataOps and Automation Technologies

10.2 The Role of Machine Learning in Automating Data Pipelines

10.3 Evolving Trends in DataOps Automation

Conclusion

DataOps automation enables organizations to manage large volumes of data efficiently and deliver high-quality insights with minimal manual intervention. By automating the creation, validation, deployment, and monitoring of data pipelines, businesses can deliver data faster and more reliably while reducing the errors associated with manual processes. As data continues to grow in volume and complexity, the ability to automate data operations will become essential for organizations looking to maintain agility, improve decision-making, and stay competitive in an increasingly data-driven world.
