Introduction

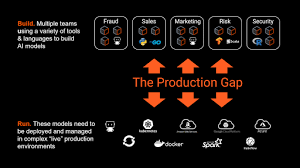

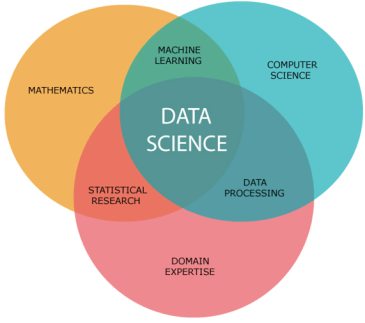

DataRobot provides an AI-powered platform for automated machine learning and model deployment at scale. This course focuses on using DataRobot’s model monitoring tools to ensure that deployed models continue to perform accurately in production. You’ll learn best practices for tracking model performance, detecting drift, and maintaining model health over time, so that you can recognize when changing data patterns require a model to be retrained or updated.

Prerequisites

- Familiarity with machine learning concepts and algorithms

- Basic understanding of DataRobot platform functionality

- Experience with model deployment and performance metrics

Table of contents

- Introduction to Model Monitoring in DataRobot

1.1 Overview of DataRobot Model Deployment and Monitoring Features

1.2 The Importance of Monitoring Models in Production

1.3 Key Metrics for Model Performance: Accuracy, Precision, Recall, AUC, etc.

1.4 DataRobot’s Auto-Model Monitoring and Alerts

- Setting Up Model Monitoring in DataRobot

2.1 Understanding Model Monitoring Tools in DataRobot

2.2 How to Set Up and Configure Model Monitoring for Deployed Models

2.3 Establishing Performance Benchmarks and Baselines

2.4 Using Model Performance Dashboards for Ongoing Monitoring

- Tracking Model Drift and Concept Drift

3.1 What is Model Drift and Why Does it Matter?

3.2 Identifying Data Drift, Concept Drift, and Feature Drift in Production

3.3 Using DataRobot’s Drift Detection Features

3.4 Best Practices for Managing Drift and Triggering Model Retraining

- Analyzing Model Performance in Real Time

4.1 Real-Time Monitoring vs Batch Monitoring: Which to Choose?

4.2 Tracking Model Inputs and Outputs over Time

4.3 Using DataRobot’s Performance Evaluation Tools

4.4 Handling Performance Degradation and Implementing Remediation Strategies

- Model Retraining and Model Updating

5.1 Understanding When and Why to Retrain Models

5.2 Automating Model Retraining with DataRobot

5.3 Retraining Based on New Data, Feature Changes, or Performance Degradation

5.4 Managing the Life Cycle of Deployed Models: Version Control and Rollbacks

- Ensuring Ethical and Fair Model Performance

6.1 Monitoring for Bias and Unintended Consequences

6.2 Detecting Bias in Real-Time: Tools and Techniques

6.3 Leveraging DataRobot’s Fairness Metrics to Ensure Ethical Decisions

6.4 Implementing Bias Mitigation Techniques and Best Practices

- Advanced Model Monitoring Strategies

7.1 Leveraging Custom Monitoring Dashboards for Deep Insights

7.2 Monitoring Complex Models: Ensemble, Stacked, and Custom Models

7.3 Implementing Advanced Alerting Mechanisms for Anomalies and Failures

7.4 Integrating with Third-Party Tools for Enhanced Model Monitoring

- Troubleshooting and Optimizing Model Performance

8.1 Identifying Common Model Performance Issues in Production

8.2 Using DataRobot’s Diagnostics and Troubleshooting Tools

8.3 Optimizing Model Efficiency and Resource Consumption in Production

8.4 Collaborating with Data Scientists for Continuous Improvement

- Automating the Model Monitoring Process

9.1 Creating Automated Workflows for Continuous Monitoring

9.2 Scheduling Performance Reports and Alerts in DataRobot

9.3 Integrating Model Monitoring with CI/CD Pipelines for Seamless Updates

9.4 Leveraging the API and Webhooks for Model Monitoring Automation

- Case Studies and Real-World Applications

10.1 Industry Case Studies: How Leading Organizations Use Model Monitoring

10.2 Best Practices from DataRobot’s Enterprise Clients

10.3 Real-World Scenarios: Addressing Common Monitoring Challenges

10.4 Collaborative Tools and Reporting for Cross-Functional Teams

- Capstone Project: Monitoring a Deployed Model in Production

11.1 Setting Up a Full Monitoring Pipeline for a Deployed Model

11.2 Implementing Alerts, Drift Detection, and Performance Tracking

11.3 Documenting Findings and Recommending Improvements

11.4 Preparing a Final Report for Stakeholders

Conclusion

Upon completing this course, you will have the tools and knowledge needed to monitor machine learning models effectively in production using DataRobot. You’ll be able to track performance, detect issues such as drift, retrain models when necessary, and ensure that models continue to deliver accurate and reliable results over time. This training will help you maintain high standards of performance and compliance, keeping your machine learning initiatives successful and sustainable.