Description

Introduction

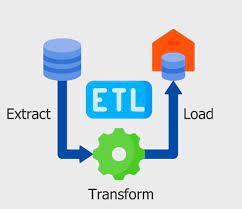

In data engineering, extract, transform, load (ETL) is the process that turns large volumes of raw data into datasets a business can actually use. ETL tools automate and streamline this process, integrating and transforming data from multiple sources into meaningful datasets for analysis, reporting, and decision-making. This course guides you through using popular ETL tools to integrate and transform data from various sources, with a focus on workflow efficiency and data quality.

Throughout the course, you will learn to use tools like Apache NiFi, Talend, and Microsoft SQL Server Integration Services (SSIS) to perform ETL tasks. You will explore data extraction, cleansing, transformation, and loading, with practical applications that help you build scalable, automated ETL pipelines capable of processing large volumes of data from heterogeneous systems. By mastering these tools, you will be equipped to handle complex data integration challenges.
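To make the extract, cleanse, transform, and load stages concrete, here is a minimal sketch in plain Python. It is not how NiFi, Talend, or SSIS implement these steps; it only illustrates the shape of a pipeline. The sample CSV, the `sales` table, and the function names are hypothetical, chosen purely for illustration.

```python
import csv
import io
import sqlite3

# Hypothetical raw input: a small CSV with a duplicate row and a missing amount.
RAW_CSV = """id,name,amount
1,alice,10.5
2,bob,
2,bob,
3,carol,7
"""

def extract(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: drop duplicate ids, default missing amounts to 0.0, title-case names."""
    seen = set()
    cleaned = []
    for row in rows:
        if row["id"] in seen:
            continue  # cleansing step: skip rows whose id was already seen
        seen.add(row["id"])
        amount = float(row["amount"]) if row["amount"] else 0.0
        cleaned.append((int(row["id"]), row["name"].strip().title(), amount))
    return cleaned

def load(records, conn):
    """Load: write the cleaned records into a SQLite table (assumed target)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (id INTEGER PRIMARY KEY, name TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", records)
    conn.commit()

# Run the three stages end to end against an in-memory database.
conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
result = conn.execute("SELECT id, name, amount FROM sales ORDER BY id").fetchall()
print(result)
```

Keeping each stage as a separate function mirrors how dedicated ETL tools separate source connectors, transformation steps, and target writers, which makes each stage easier to test and replace on its own.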

Prerequisites

- Basic knowledge of data engineering and database management.

- Familiarity with SQL and relational databases (SQL Server, MySQL, etc.).

- Understanding of data transformation concepts and their importance in analytics.

- Basic understanding of cloud platforms (optional but helpful).

Table of Contents

- Introduction to ETL and Data Engineering

1.1 What is ETL and Why is it Important for Data Engineering?

1.2 Overview of the ETL Process: Extract, Transform, Load

1.3 Introduction to ETL Tools and Their Role in Data Integration

1.4 Key Concepts in Data Transformation: Data Quality, Data Cleansing, and Normalization

- Overview of Popular ETL Tools

2.1 Introduction to Apache NiFi for Data Integration

2.2 Using Talend for Data Transformation and Loading

2.3 Microsoft SQL Server Integration Services (SSIS) for ETL Automation

2.4 Comparing Open-Source vs. Commercial ETL Tools

- Extracting Data from Multiple Sources

3.1 Connecting to Relational Databases for Data Extraction

3.2 Extracting Data from Cloud Sources (AWS S3, Azure Blob Storage)

3.3 Integrating with APIs and Web Services for Data Extraction

3.4 Working with Semi-Structured and Unstructured Data (JSON, XML, etc.)

- Data Transformation Techniques

4.1 Data Cleansing: Handling Missing and Duplicate Data

4.2 Data Normalization and Standardization

4.3 Complex Data Transformations: Aggregations, Joins, and Lookups

4.4 Using Scripts for Custom Data Transformations (Python, SQL)

- Loading Data into Target Systems

5.1 Loading Data into Relational Databases (MySQL, PostgreSQL, SQL Server)

5.2 Writing Data to Data Warehouses and Data Lakes (Redshift, BigQuery, Hadoop)

5.3 Loading Data into Cloud Storage (AWS S3, Azure Blob Storage, Google Cloud Storage)

5.4 Using Batch and Real-Time Data Loading Methods

- Orchestrating ETL Pipelines

6.1 Automating ETL Workflows with Apache NiFi

6.2 Scheduling and Monitoring ETL Jobs with Talend

6.3 Using SSIS for Data Pipeline Orchestration

6.4 Integrating with Apache Airflow for Advanced Workflow Management

- Optimizing ETL Processes

7.1 Best Practices for Performance Tuning ETL Pipelines

7.2 Parallel Processing and Distributed Data Processing

7.3 Handling Large Datasets and Big Data in ETL Pipelines

7.4 Managing ETL Failures and Error Handling

- Data Quality and Testing in ETL

8.1 Ensuring Data Quality in ETL Pipelines

8.2 Using Data Profiling and Validation Techniques

8.3 Automated Testing for ETL Processes

8.4 Monitoring and Auditing Data Quality

- Scaling and Managing ETL Workflows

9.1 Scaling ETL Pipelines for High-Volume Data

9.2 Using Cloud-Based ETL Tools for Scalability

9.3 Managing ETL Jobs in Distributed Systems

9.4 Version Control and Documentation in ETL Projects

- Real-World Use Cases and Best Practices

10.1 Building an End-to-End ETL Pipeline with Apache NiFi

10.2 Data Integration and Transformation in the Cloud

10.3 Implementing Real-Time ETL Pipelines with Kafka and NiFi

10.4 Best Practices for Maintaining and Optimizing ETL Systems

Conclusion

By completing this course, you will gain practical experience with widely used ETL tools for automating and managing data integration processes. You will have learned how to extract, transform, and load data from various sources into different target systems while maintaining data quality and scalability. You’ll also be equipped to orchestrate and automate complex data workflows using Apache NiFi, Talend, and SSIS.

Mastering ETL tools and best practices will significantly improve your ability to manage large datasets, automate repetitive data tasks, and integrate data from diverse sources. With this knowledge, you’ll be prepared to tackle real-world data engineering challenges and contribute to building efficient, scalable, and maintainable data pipelines in any organization.
